A useful and creative AI system for UI and UX design

So, the hype is up and everyone is talking about the disruptive AI called DALL·E 2. DALL·E 2 is an update to OpenAI’s text-to-image generator DALL·E (often called DALL·E 1). DALL·E 1 was already exceptional in its own right and produced striking results. The main difference between DALL·E 1 and DALL·E 2 lies in the underlying models: DALL·E 1 generated images with an autoregressive transformer, while DALL·E 2 combines CLIP with a diffusion model.

What does DALL·E do?

Let’s try to understand exactly what DALL·E does. In simple terms, DALL·E is an AI that takes a natural-language text description as input and turns it into photorealistic images, in multiple variations and different art styles, all within about 10 seconds! It sounds astonishing and a little spooky at the same time. However unreal and mysterious it seems at first, we have to test how faithfully the output matches the input. We should also mention that DALL·E 2 is a research project by OpenAI and is not yet available to the general public. OpenAI has so far granted access only to a limited group of testers. Over the past few months, many of them have posted examples of how DALL·E performed on their prompts.

Here are a few interesting examples of prompts and the images DALL·E produced for them.

PROMPT ONE — “Surrealistic golden doodle puppy in play position”

PROMPT TWO — “a dream-like oil painting by Renoir of dogs playing poker”

PROMPT THREE — “a potato wearing a trench coat in a heroic pose, 3D digital art”

By now, you can see how powerful AI is and how quickly it can produce stunning results from nothing more than a natural-language description of an image. This raises the question: how does DALL·E perform a task like this, and what algorithms does it run on? Let’s find out!

How does DALL·E work?

DALL·E 2 relies on two main AI models. The first is called CLIP, which helps DALL·E understand various concepts. CLIP is trained on a large number of images paired with their captions, so when we enter the prompt ‘Car’, it can relate the word to what cars look like across many images. In this way, DALL·E understands concepts like a white car, a blue car, and so on.
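CLIP learns to place a caption and its matching image close together in a shared embedding space. The sketch below illustrates that idea with hand-picked toy vectors. It is not OpenAI’s code: the embeddings are hypothetical stand-ins for the outputs of CLIP’s text and image encoders, the temperature of 0.07 is an assumed starting value, and `clip_style_loss` is a generic symmetric contrastive (InfoNCE-style) loss of the kind used to train CLIP-like models.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_caption(image_emb: list[float], caption_embs: dict[str, list[float]]) -> str:
    """Return the caption whose embedding points most nearly in the image's direction."""
    return max(caption_embs, key=lambda c: cosine_similarity(image_emb, caption_embs[c]))


def clip_style_loss(text_embs: list[list[float]], image_embs: list[list[float]],
                    temperature: float = 0.07) -> float:
    """Symmetric contrastive loss: caption i should match image i and no other image."""
    n = len(text_embs)
    logits = [[cosine_similarity(t, im) / temperature for im in image_embs] for t in text_embs]

    def cross_entropy(rows: list[list[float]]) -> float:
        total = 0.0
        for i, row in enumerate(rows):
            m = max(row)  # subtract the max for numerical stability
            log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
            total += log_sum_exp - row[i]  # -log softmax probability of the correct pair
        return total / n

    columns = [list(col) for col in zip(*logits)]  # image-to-text direction
    return (cross_entropy(logits) + cross_entropy(columns)) / 2


# Hypothetical 3-dimensional embeddings; real CLIP embeddings have hundreds of dimensions.
captions = {
    "a white car": [0.9, 0.1, 0.0],
    "a blue car": [0.1, 0.9, 0.0],
    "a puppy": [0.0, 0.1, 0.9],
}
print(best_caption([0.2, 0.8, 0.1], captions))  # prints: a blue car
```

During training, the encoders are adjusted until this loss is low for correctly paired captions and images, which is what lets a CLIP-like model tell “a white car” apart from “a blue car”.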

With the help of CLIP, DALL·E captures what the prompt should contain. But how does it render that into a beautiful final image, just as we would expect? That’s where diffusion comes into play. Diffusion is a process that turns random noise into a finished image, step by step.

A diffusion model is trained by taking clear images, gradually adding noise to them, and learning to undo that corruption one small step at a time. Once it has learned this, it can run the process in reverse: starting from pure noise and guided by what CLIP has captured about the prompt, DALL·E removes the noise step by step until a clear image emerges, all within a short period.
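The noising and denoising idea can be sketched in a few lines. This toy example follows the common denoising-diffusion recipe rather than DALL·E 2’s actual code: an assumed linear noise schedule, a closed-form forward step that mixes an image with Gaussian noise, and a deterministic reverse loop in the style of DDIM sampling. In DALL·E 2, the noise predictor is a large trained network conditioned on a CLIP embedding. Here it is replaced by a hypothetical `make_oracle` helper that already knows the target, so the loop recovers it exactly, whereas a real model only approximates this prediction.

```python
import math
import random


def make_schedule(num_steps: int = 1000, beta_start: float = 1e-4,
                  beta_end: float = 0.02) -> list[float]:
    """Linear noise schedule; returns alpha_bar[t], the fraction of signal left after step t."""
    alpha_bars, prod = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def add_noise(x0: list[float], alpha_bar: float, rng: random.Random):
    """Forward process: jump straight to noise level alpha_bar in one step."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e for x, e in zip(x0, noise)]
    return xt, noise


def make_oracle(target: list[float], alpha_bars: list[float]):
    """Hypothetical stand-in for the trained network: predicts the noise in x exactly."""
    def predict_noise(x: list[float], t: int) -> list[float]:
        ab = alpha_bars[t]
        return [(xi - math.sqrt(ab) * ti) / math.sqrt(1.0 - ab) for xi, ti in zip(x, target)]
    return predict_noise


def sample(predict_noise, size: int, alpha_bars: list[float],
           rng: random.Random) -> list[float]:
    """Reverse process: start from pure noise and remove a little of it at every step."""
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]
    for t in reversed(range(len(alpha_bars))):
        ab_t = alpha_bars[t]
        ab_prev = alpha_bars[t - 1] if t > 0 else 1.0
        eps = predict_noise(x, t)
        # Estimate the clean image, then re-noise it to the slightly lower level ab_prev.
        x0_hat = [(xi - math.sqrt(1.0 - ab_t) * ei) / math.sqrt(ab_t) for xi, ei in zip(x, eps)]
        x = [math.sqrt(ab_prev) * x0i + math.sqrt(1.0 - ab_prev) * ei
             for x0i, ei in zip(x0_hat, eps)]
    return x


rng = random.Random(0)
schedule = make_schedule()
image = [0.5, -0.2, 0.9, 0.0]  # a toy four-pixel "image"
noisy, _ = add_noise(image, schedule[-1], rng)  # almost pure noise at the last step
restored = sample(make_oracle(image, schedule), len(image), schedule, rng)
print([round(v, 3) for v in restored])
```

The forward step needs no learning at all. All of the difficulty sits in `predict_noise`, which is exactly the part a real diffusion model has to learn from data.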

How does a human designer work?

From the above, it is clear that DALL·E is very powerful. Now let’s look at how a designer works in a similar scenario when given a prompt. A designer’s general process looks something like this:

Step 1 — Understand the prompt.

Step 2 — Look for references suitable for the prompt.

Step 3 — Ideate a suitable way to bring everything together.

Step 4 — Use design software and its built-in tools to manipulate the collected references and combine them into a single picture.

This process can easily take a designer a day. As the steps above show, DALL·E works in a broadly similar way to a human designer, which is why this technology cannot be ignored. (Steps 1 and 2 are closer to the function of CLIP, and steps 3 and 4 are closer to the function of diffusion.)

Can DALL·E replace designers?

Though DALL·E is very good at creating multiple outputs for a prompt in a short period, it also has downsides.

- DALL·E 2 could be used to create deepfakes. A deepfake is a photo or video fabricated by AI, for example by convincingly replacing one person’s face with someone else’s (you could create a video that appears to show someone famous committing a crime). However, OpenAI is adding safeguards to prevent this type of misuse.

- DALL·E 2 is weak at compositionality, which is the meaningful combination of multiple object properties such as color, shape, and position within an image. In tests designed to analyze this, DALL·E 2 failed to follow the logical relationships given in the descriptions and therefore arranged colored cubes incorrectly.

- DALL·E 2 struggles with negation. For example, a prompt for “a spaceship without an apple” produced a spaceship with an apple. It also has trouble counting and cannot accurately count beyond four.

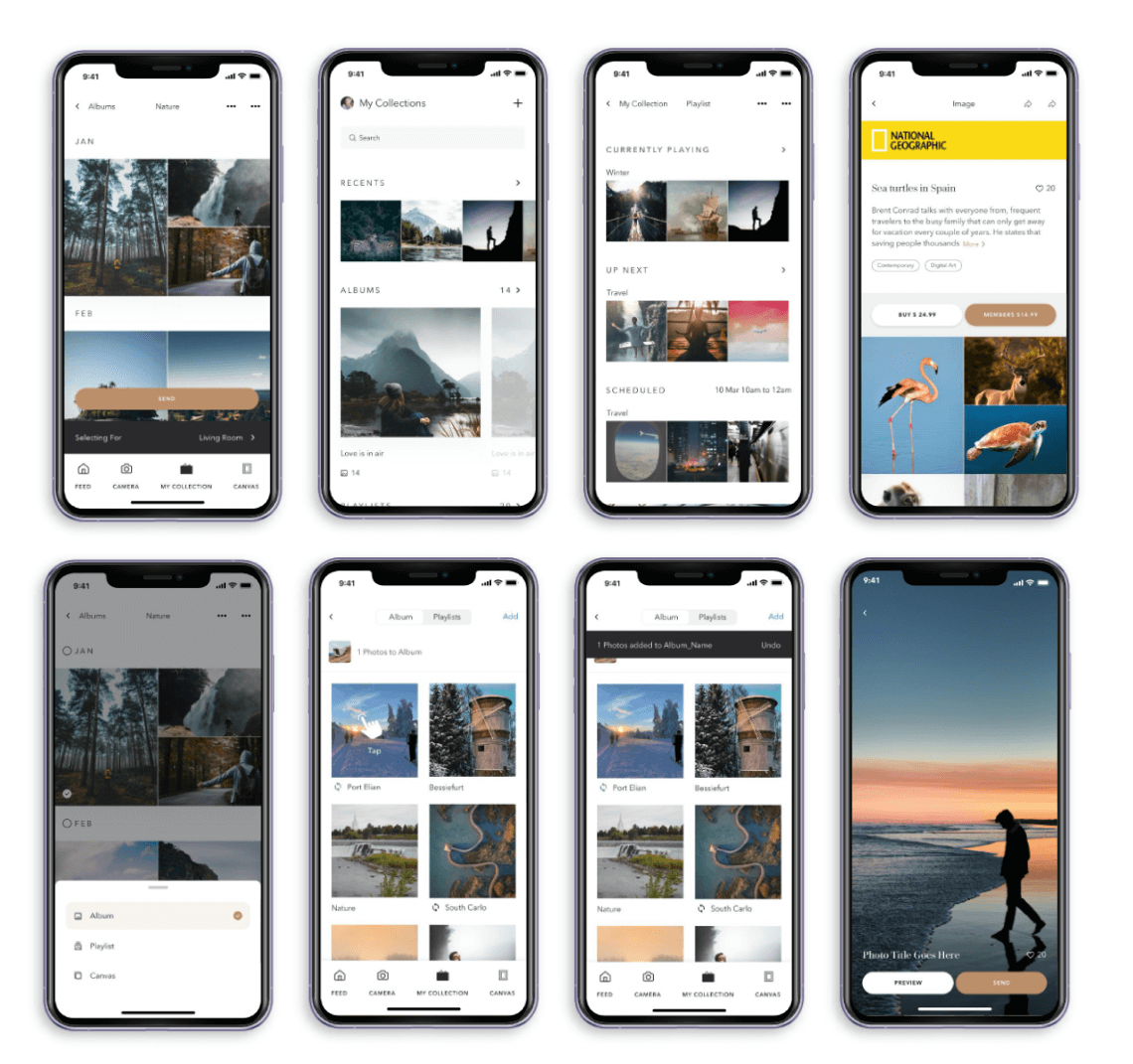

Many tools are available to graphic designers, and some of them already use AI to boost productivity. For example, Adobe Photoshop now offers several AI-powered features that help with image processing, such as reducing glare and noise, and with image manipulation, such as changing the background. These features save a lot of time, but they do not necessarily create original content.

DALL·E, on the other hand, helps us create original content, though it still has glitches in various scenarios. Nevertheless, this is only the second version of the technology, and we expect major updates that extend it to more areas of design, leaving us with a lot to look forward to.

Verdict: Can DALL·E 2 replace humans?

Various case studies have pitted a designer against DALL·E. Given enough time, designers can produce results that DALL·E cannot, even though DALL·E produces its images far faster.

In the end, DALL·E is a technology created by humans, and it has its limitations. Though it can be very powerful and help designers brainstorm creative ideas, DALL·E appears to be a long way from completely replacing UI and UX designers. When designers and DALL·E work together well, workflows can be improved and accelerated, and creativity can be enhanced. Ultimately, every technology ever invented should serve to minimize human effort, and DALL·E does just that. Kudos to the creators.